Defining Data Obfuscation

Data obfuscation is a technique for hiding data by transforming it into a form that is difficult to understand or interpret while still preserving its essential properties. This prevents unauthorized users from accessing specific information while maintaining its utility. There can be a trade-off, however: the more private an obfuscation technique makes the data, the less useful the data might become.

Here at hubspot.com.in, our bread and butter is empowering developers to test their software with the peace of mind that they will not be putting their customers’ information at risk. Developers use Tonic to easily access high-quality and secure test data. Our platform is industry-leading in the range of techniques it offers, including masking, subsetting, differential privacy, and more. Many of the methods we use are considered data obfuscation methods. What is data obfuscation, you ask? In this post, we will take a deep dive into the privacy-preserving techniques that fall under data obfuscation.

Obfuscation of Sensitive Data: Why and How

There are several reasons to obfuscate sensitive data:

General privacy protection – Personally identifiable information, financial details, and medical records are examples of sensitive data that your organization would want to protect against attacks.

Regulatory compliance – Data protection regulations like GDPR, HIPAA, and CCPA hold organizations accountable for maintaining the privacy of the individuals they collect data from.

Data sharing – If data must be shared with people outside your organization, such as researchers or partners, data obfuscation lets you do so without compromising your sensitive data.

Testing and development – Data used for software development and testing can be at risk of exposure. Obfuscating your production databases is one way to give your developers access to the data they need to ship the best products while still respecting the privacy of your customers.

Regardless of what kind of sensitive data you have, data obfuscation can be carried out in the following steps:

Data classification – Identify and categorize the sensitive data in your database.

Choose an appropriate obfuscation technique – Based on the type of data and the level of protection required, choose the right technique. Options include tokenization, encryption, masking, generalization, and synthetic data generation.

Data retention and access controls – Once you’ve applied the appropriate technique to your data, make sure that only those who are authorized have access to it.

Testing and validation – The obfuscated data should be thoroughly tested. This includes validating the data’s usefulness for analysis, research, and testing.

Continuous monitoring – The data should still be regularly reviewed to make sure that the obfuscation techniques keep pace with changing privacy requirements. Data obfuscation should be viewed as an ongoing process.
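As a rough illustration of the first step, the sketch below flags columns that look sensitive by matching sample values against a few regular expressions. The patterns, labels, and the `classify_column` helper are hypothetical and deliberately simplistic; a real classifier would need far broader rules and human review.

```python
import re

# Hypothetical patterns for illustration only; real data needs broader rules.
PATTERNS = {
    "email": re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$"),
    "us_ssn": re.compile(r"^\d{3}-\d{2}-\d{4}$"),
    "card_number": re.compile(r"^(?:\d[ -]?){13,19}$"),
}

def classify_column(values, threshold=0.8):
    """Return the first label whose pattern matches at least `threshold`
    of the non-empty values, or None if no pattern qualifies."""
    non_empty = [v.strip() for v in values if v and v.strip()]
    if not non_empty:
        return None
    for label, pattern in PATTERNS.items():
        hits = sum(1 for v in non_empty if pattern.match(v))
        if hits / len(non_empty) >= threshold:
            return label
    return None
```

Running this over a sample of each column gives a first-pass inventory of what needs obfuscating, which can then feed the choice of technique.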

Exploring Different Methods and Techniques of Data Obfuscation

There are various ways you can obfuscate your data. Some options preserve more of the data's usability, while others are better at keeping the data secure. The choice of technique should be based on the type of data, the level of protection necessary, and what the data is being used for.

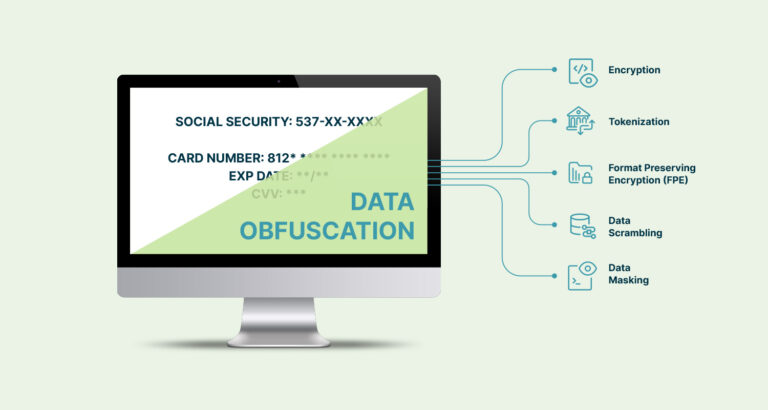

Overview of Data Obfuscation Techniques

Tokenization

Tokenization involves replacing sensitive data with tokens, or random strings of characters, while the original data is securely stored. This is best for obfuscating personally identifiable information, such as credit card numbers and email addresses.
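A minimal sketch of the idea, assuming an in-memory dictionary stands in for the secured store. The `TokenVault` class and the `tok_` prefix are hypothetical names used for illustration, not any product's API.

```python
import secrets

class TokenVault:
    """In-memory stand-in for a secured token store (hypothetical)."""

    def __init__(self):
        self._token_by_value = {}
        self._value_by_token = {}

    def tokenize(self, value):
        # Reuse the existing token so the same input always maps to the same token.
        if value in self._token_by_value:
            return self._token_by_value[value]
        token = "tok_" + secrets.token_hex(8)  # random, unrelated to the value
        self._token_by_value[value] = token
        self._value_by_token[token] = value
        return token

    def detokenize(self, token):
        # Only callers with access to the vault can recover the original value.
        return self._value_by_token[token]
```

Because the token is random rather than derived from the value, it reveals nothing on its own; the protection depends entirely on how well the mapping is secured.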

Encryption

Encryption transforms data into a format that is unreadable to an attacker. This requires the use of complex algorithms and the maintenance of a decryption key so that the data can be transformed back to its original form. There are several different types of encryption that differ based on the algorithm used to transform the data, such as format-preserving and homomorphic encryption.

Masking

Technically speaking, masking mainly refers to techniques to hide a certain subset of sensitive data. It is a term commonly used interchangeably with data obfuscation to talk broadly about many of these data privacy techniques.
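One common form of masking hides all but the last few digits of a value. The sketch below assumes that convention; the `mask_card_number` helper is a hypothetical name.

```python
def mask_card_number(card_number, visible=4, mask_char="*"):
    """Replace every digit except the last `visible` digits, keeping separators."""
    total_digits = sum(ch.isdigit() for ch in card_number)
    digits_to_mask = max(total_digits - visible, 0)
    masked = []
    for ch in card_number:
        if ch.isdigit() and digits_to_mask > 0:
            masked.append(mask_char)
            digits_to_mask -= 1
        else:
            masked.append(ch)  # separators and the trailing digits pass through
    return "".join(masked)

print(mask_card_number("4111-1111-1111-1234"))  # ****-****-****-1234
```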

Generalization

Generalization involves reducing the granularity and specificity of the data by aggregating it or putting it into a broader format. This is best used for research or analysis that only requires overall trends.
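For example, exact ages can be replaced with ranges and postal codes can be truncated. The helpers below are an illustrative sketch with hypothetical names and bucket sizes.

```python
def generalize_age(age, bucket_size=10):
    """Map an exact age to a range such as '30-39'."""
    lower = (age // bucket_size) * bucket_size
    return f"{lower}-{lower + bucket_size - 1}"

def generalize_zip(zip_code, keep=3):
    """Keep the first `keep` characters of a postal code and star out the rest."""
    return zip_code[:keep] + "*" * (len(zip_code) - keep)

print(generalize_age(34))       # 30-39
print(generalize_zip("94107"))  # 941**
```

Larger buckets and shorter prefixes give more privacy at the cost of less detail, which is the same trade-off described throughout this post.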

Randomization

Perturbing the data or adding random noise to it is another way to make it more challenging to identify individuals from the data. The amount and type of noise are controlled based on the privacy/utility requirements of your obfuscation use case. This method is most useful when the data will be used for analysis or machine learning.
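A minimal sketch of noise addition, assuming zero-mean Gaussian noise and a hypothetical `add_noise` helper. On its own this does not provide a formal guarantee such as differential privacy; it only illustrates how the `scale` parameter trades utility for privacy.

```python
import random

def add_noise(values, scale, seed=None):
    """Add zero-mean Gaussian noise with standard deviation `scale` to each value."""
    rng = random.Random(seed)  # a seed makes runs repeatable for testing
    return [v + rng.gauss(0, scale) for v in values]

salaries = [52000, 61000, 75000]
noisy = add_noise(salaries, scale=1000, seed=42)
```

A larger `scale` hides individual values more effectively, but aggregates computed from the noisy data drift further from the true ones.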

Data Synthesis

In synthetic data generation, new data is created based on the patterns in a real dataset or based on rules defined by a user. When created based on the patterns in a real dataset, the output data cannot be tied back to any individual.
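As a simplified sketch of pattern-based synthesis, the code below samples a categorical column by its observed frequencies and draws a numeric column from a normal distribution fitted to the real values. The `synthesize_rows` helper and the `(plan, monthly_spend)` schema are hypothetical. The sketch treats columns independently, so it loses correlations between them, and it makes no privacy guarantee by itself.

```python
import random
import statistics

def synthesize_rows(real_rows, n, seed=None):
    """Generate n synthetic (plan, monthly_spend) rows that mimic
    the column-level patterns of real_rows (needs at least 2 rows)."""
    rng = random.Random(seed)
    plans = [plan for plan, _ in real_rows]
    spends = [spend for _, spend in real_rows]
    mean = statistics.mean(spends)
    stdev = statistics.stdev(spends)
    synthetic = []
    for _ in range(n):
        plan = rng.choice(plans)  # samples plans at their observed frequencies
        spend = max(0.0, round(rng.gauss(mean, stdev), 2))  # clamp at zero
        synthetic.append((plan, spend))
    return synthetic

real = [("free", 0.0), ("pro", 49.0), ("pro", 59.0)]
fake = synthesize_rows(real, n=5, seed=1)
```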

Common Methods of Data Obfuscation

The methods used to execute these techniques vary depending on the data pipeline architecture. Other factors that would influence your methodology might be tooling, programming languages, and of course the specific privacy regulations of your organization. Some common methods include:

- Tokenization mechanisms integrated into processing pipelines and applications.

- Encryption implemented before storage or transmission.

- Custom scripts used to mask data either directly in database views or during data transformation processes.

- Generalization, randomization, perturbation, and shuffling tools that can be implemented to automate these processes in repeatable and controllable ways.

- Synthetic data generation libraries can be used to match the statistical characteristics of the original data.

Again, there are many different methods of data obfuscation. It is important to decide whether these methods should be implemented before, during, or after data storage or transfer.

Best Practices of Data Obfuscation

To most effectively obfuscate your data you must first and foremost understand the types of data you are working with. The obfuscation methods you might want to use would be different for structured numeric data versus unstructured text-based data. It is important to be intimately familiar with the risks associated with using your data in terms of how sensitive it is. This helps weigh whether you will want to prioritize utility over privacy or vice versa when selecting the technique you want to use. Also knowing exactly the regulations you are working to comply with will help guide what techniques and methods you choose to adopt.

When choosing what method or techniques to use, make sure you are only considering proven techniques and not just devising an approach on your own. If you do want to develop your own technique, do so with the understanding that there is potentially a higher risk for your data to be compromised.

To minimize the risk of compromising your data, it is smart to implement strong access controls within your organization. Not everyone at the org needs full access to all of your customers’ information, so it is important to set your access controls on a need-to-know basis. Further, educating all employees on your organization's data security policies and procedures will keep everyone on the same page about how to handle data, including how to implement obfuscation techniques.

Finally, it is always wise to document your procedures and provide a rationale for each step. This ensures transparency in policies and regulations and can help train new people as well as go back and reform outdated policies.

Tools and Software for Data Obfuscation

There are many different tools out there that can assist in executing data obfuscation properly. Choosing the right one will largely depend on your data management architecture, how you transfer data, and who needs access to it. There are generally three groups of tools: legacy test data management (TDM) software, open source tools, and modern data platforms.

Legacy TDM software typically refers to the early generation of data obfuscation tools. These tools offer data masking, simple encryption, and database virtualization. They were often built with an emphasis on data security over data utility, and as such, the approaches they take to data obfuscation aren’t focused on generating realistic data as the output. This can make their obfuscated data less useful in testing and development. Ease of use and the ability to work at scale with today’s data can also be an issue with these tools, given their more dated approach to test data. Simply put, they aren’t built to work with today’s complex data pipelines and modern CI/CD workflows.

Open-source solutions like Faker are freely available for anyone to use, modify, and distribute, and are generally maintained by a community of developers. These solutions can be great for simpler use cases and smaller datasets but are insufficient for teams needing to work across their production data in an efficient and secure way. The privacy guarantees are weaker and the maintenance demands are high. As the old adage goes, nothing is truly free, and the cost of using open-source solutions is the time they take to set up and maintain.

Modern data platforms integrate advanced data obfuscation techniques with expanded data generation, management, and security capabilities. These technologies, such as Tonic, provide well-rounded solutions for intuitively and securely implementing data obfuscation into your data workflows by way of seamless integrations and automations like fully accessible APIs. Since these platforms arose in the modern age of data lakes and cloud data storage, as well as the age of GDPR and CCPA, they are built to handle complex data, scale with your organization, and guarantee data privacy compliance.

How to Obfuscate Data: Understanding Data Obfuscation Algorithms

There are several data obfuscation algorithms, each with its own strengths and weaknesses. Some of the popular algorithms used for data obfuscation are listed below.

| Algorithm | Description |
| --- | --- |
| Base64 Encoding | Base64 Encoding is an algorithm used to represent images, audio, or binary files using ASCII characters. It’s used to ensure that data can be transmitted or stored in environments that only support text. The data is obfuscated because the result is a seemingly random set of characters. |
| Hashing | Hashing refers to converting input data of any size into a fixed-length value called a hash value or a hash code using a mathematical algorithm. This method is useful for data obfuscation since the same input will always produce the same hash value, making it very repeatable. The greatest advantage of this is that it is especially helpful for data integrity and verification. |
| Salted Hashing | Salted Hashing is an advanced hashing method that adds a random value to the data before hashing it. This is mainly used to improve the security of hashed data when the information is particularly sensitive, like passwords. This is advantageous for obfuscating common values since the generated hash value will be different for each repeated value due to the random salt. |
| XOR Encryption | XOR Encryption uses exclusive OR logic to encrypt and decrypt data. It takes two binary inputs and produces an output where each bit is the result of applying the XOR operation to the corresponding bits of the inputs. |

These are just a few of the many algorithms used to execute different obfuscation techniques.
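The four algorithms in the table can be sketched with Python's standard library. The function names below are hypothetical, and SHA-256 is an assumed choice of hash function. Note that Base64 is reversible by anyone and XOR with a short repeating key is easy to break, and that production password storage usually relies on a deliberately slow key-derivation function rather than a single salted hash.

```python
import base64
import hashlib
import secrets

def base64_encode(data):
    """Represent bytes as ASCII text; reversible without any key."""
    return base64.b64encode(data).decode("ascii")

def sha256_hash(data):
    """Fixed-length digest; the same input always gives the same output."""
    return hashlib.sha256(data).hexdigest()

def salted_hash(data, salt=None):
    """Hash data with a random salt; return (salt_hex, digest_hex)."""
    if salt is None:
        salt = secrets.token_bytes(16)
    digest = hashlib.sha256(salt + data).hexdigest()
    return salt.hex(), digest

def xor_bytes(data, key):
    """XOR each byte with the repeating key; applying it twice restores the input."""
    return bytes(b ^ key[i % len(key)] for i, b in enumerate(data))

print(base64_encode(b"hello"))  # aGVsbG8=
ciphertext = xor_bytes(b"secret", b"k")
assert xor_bytes(ciphertext, b"k") == b"secret"
```

To verify a value against a stored salted hash, pass the stored salt back in (as bytes) and compare the digests.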

Final Thoughts on Data Obfuscation

Data obfuscation is an important technique for protecting sensitive data from unauthorized access. By transforming data into a format that isn’t easily recognizable or understandable, data obfuscation can help maintain the privacy and confidentiality of sensitive data. Understanding the various methods, techniques, and tools used for data obfuscation is essential for making the most of this approach to data security, especially when realism and utility for software development and testing are key to unlocking your development team’s productivity.

To learn more about the obfuscation capabilities of the Tonic test data platform, visit our product docs or connect with our team.

Abstract:

The research community has been highlighting security issues related to network traffic analysis. Using machine learning techniques, an eavesdropper can exploit traffic features to infer useful information, threatening the privacy of users. In this paper, we propose an efficient defense against network fingerprinting attacks in which we obfuscate the information leaked by traffic features, specifically packet sizes. First, we model the packet length probability distribution of the source app to be protected and that of the target app that the source app will resemble.

Then, we define a security model that mutates the packet lengths of a source app to those lengths from the target app that have a similar bin probability. This would confuse a classifier and make it identify a mutated source app as the target app. A comprehensive simulation study of the proposed model, using real app traffic traces, shows considerable obfuscation efficiency with relatively acceptable overhead. We were able to reduce classification accuracy from 91.1% to 0.22% using the proposed algorithm, with only 11.86% padding overhead.

Configuration:

- The SDK meta role setting is Blacklist meta and content. With this option implemented, all metadata and all content (packets and logs) are visible by default.

- The administrator has restricted meta keys configured for the Analysts group to prevent viewing of sensitive data (for example, username).

- The packets and logs for any session that includes the username meta key are not visible to an analyst.

Result: Now a user who is a member of the Analysts group investigates a session. Depending on the content of the session, the results are different:

- Session 1 includes the following meta keys: ip, eth, host, and file. The session does not include username, so all packets and logs in the session are displayed.

- Session 2 includes the following meta keys: ip, time, size, file, and username. Because the session includes username, no packets or logs from the session are displayed for the analyst.
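The rule in this example can be modeled as a set intersection: content is hidden whenever a session contains any restricted meta key. The sketch below is an illustrative model only, not the product's SDK or configuration API; `RESTRICTED_META_KEYS` and `content_visible` are hypothetical names.

```python
# Meta keys the administrator has restricted for the analyst's group (assumed).
RESTRICTED_META_KEYS = {"username"}

def content_visible(session_meta_keys, restricted=RESTRICTED_META_KEYS):
    """Return True if the session's packets and logs should be shown."""
    return not (set(session_meta_keys) & set(restricted))

session_1 = ["ip", "eth", "host", "file"]
session_2 = ["ip", "time", "size", "file", "username"]
print(content_visible(session_1))  # True
print(content_visible(session_2))  # False
```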